Refactoring the Zeebe Node gRPC State Machine for Camunda Cloud: Part Two

Building a state machine with business rules to deal with connection characteristics

See Part One of this series here.

I’m refactoring the Zeebe Node client to give it the ergonomic excellence that developers have come to expect from the library, when running it against Zeebe clusters in Camunda Cloud.

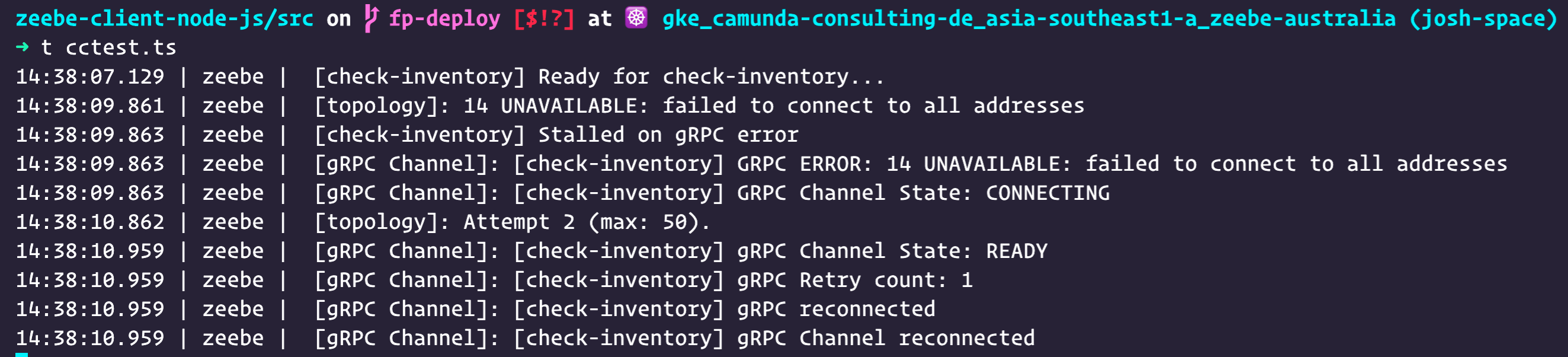

Right now, here is what it looks like connecting to Camunda Cloud with the Zeebe Node client:

Boo!

The gRPC connection with Camunda Cloud has different characteristics to self-hosted Zeebe, particularly on initial connection, and there is nowhere in the current Node client architecture to model different expectations of connection behaviour. So I am adding a “Connection Characteristics Middleware” to it.

The next step in refactoring the gRPC connection management is to refamiliarise myself with how it currently works.

I did this by reading through the current source code of the GRPCClient class and creating a BPMN diagram that models its behaviour.

BPMN (“Business Process Model and Notation”) is an XML standard for modelling business processes that can be rendered graphically. BPMN is perfect for modelling state machines, because that’s what a business process model is.

I used the Camunda Modeler to create the diagram. It supports more symbols than the Zeebe Modeler currently does. I’m not intending to execute this diagram, so I don’t need to worry about compatibility.

After creating a model of the current behaviour, I uploaded it to Cawemo, Camunda’s web-based collaborative modeling platform. Here it is:

Sidenote: Hugo Shortcode for Cawemo models

To get the model to embed in my blog, I wrote a Hugo shortcode:

Model symbols

The gRPC State Machine is heavily asynchronous and event-driven, as you can imagine. I modelled callbacks as subprocesses, and noted where something is a Promise.

Escalations to the new “Connection Characteristics Business Rules Middleware” that we are building are marked with the Escalation Event symbol.

Signals are used to trigger the debouncing connected (ready) / disconnected (error) state machines.
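To make the signal-driven part concrete, here is a minimal sketch of the de-duplication half of such a state machine. It assumes the job is to collapse a noisy stream of raw channel signals into one public notification per actual state transition. The class name `ConnectionStateMachine`, the `signal` method and the `ChannelSignal` type are hypothetical. A real debounce would also wait for a state to hold steady for some interval, and that timing part is left out here.

```typescript
import { EventEmitter } from "events";

// Hypothetical sketch: turn a noisy stream of channel signals into one
// public event per actual state transition (timing-based debounce omitted).
type ConnectionState = "unknown" | "connected" | "disconnected";
type ChannelSignal = "connected" | "disconnected";

class ConnectionStateMachine extends EventEmitter {
  private state: ConnectionState = "unknown";

  get current(): ConnectionState {
    return this.state;
  }

  // Called for every raw signal from the gRPC channel.
  signal(next: ChannelSignal): void {
    if (next === this.state) {
      return; // repeated signal: no transition, so nothing to report
    }
    this.state = next;
    // Event names mirror the client's public API ('ready' / 'connectionError').
    this.emit(next === "connected" ? "ready" : "connectionError");
  }
}

const machine = new ConnectionStateMachine();
const seen: string[] = [];
machine.on("ready", () => seen.push("ready"));
machine.on("connectionError", () => seen.push("connectionError"));

const signals: ChannelSignal[] = ["disconnected", "disconnected", "connected", "connected", "disconnected"];
signals.forEach(s => machine.signal(s));
console.log(seen); // [ 'connectionError', 'ready', 'connectionError' ]
```

Five raw signals become three notifications, which is the property that keeps listeners from being spammed while the channel flaps.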

Preliminary Conclusion

Looking over it, I’m actually pretty happy with the design. I’m not surprised though - I am pretty good. At least most of the time. And I did refine that state machine continuously over the last year, as I used it in production.

There are some obvious escalation points, and it looks like the refactor is going to be mostly lifting the inline logging out of the GRPCClient class and putting it in the middleware, where we encapsulate the business rules around connection characteristics.

Start Mashing Keys

I think I will create a GrpcConnectionFactory class. Instead of directly instantiating a gRPC client as it does now, the ZBClient will get one from this factory.

The factory will be responsible for instantiating the GRPCClient and a Middleware component with the correct profile; wiring them up; and then passing back the wired up client to the ZBClient. That makes my refactor of the ZBClient code minimal: I just replace all new GRPCClient() calls with GrpcConnectionFactory.getGrpcClient().

While I’m at it, I will rename the GRPCClient class to GrpcClient to break all existing references and normalise my naming.

I will make the Middleware component responsible for detecting the correct profile to use, determining what constitutes exceptional behaviour of the gRPC connection, and logging it out.

This will mean injecting the configured logger into it, but we’re already doing that with the GRPCClient - I mean GrpcClient - so not much change there.

The GrpcClient will no longer log exceptions. Instead it will emit events, and the Middleware component will log or suppress them. The Middleware will also have to maintain some state to determine whether an error event during connection is final (after a timeout) or transient, so it needs to be stateful.

From my reading of the source code, it looks like I just replace logging in the GrpcClient with events emitted to the Middleware.

It would be good to be able to make the GrpcClient class private to a module or namespace, but I don’t know if you can do that in TypeScript without putting both classes in the same file. Anyway, the linter settings in the project disallow modules and namespaces. And it’s not exported from the library, so whatevs.

GrpcConnectionFactory

First cut, I just make a class with a static method getGrpcClient, have it take the same args as the GrpcClient constructor, and return a new instance of the class:
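A sketch of what that first cut could look like. The `GrpcClient` here is a stand-in that only stores its config. The config fields `packageName` and `service` are illustrative assumptions, not the library's real constructor signature. Port 26500 is the Zeebe gateway's default.

```typescript
// Hypothetical stand-in: the real GrpcClient constructor opens a gRPC channel
// and takes a richer config; this one just keeps the config it was given.
interface GrpcClientConfig {
  host: string;
  packageName: string;
  service: string;
}

class GrpcClient {
  readonly config: GrpcClientConfig;
  constructor(config: GrpcClientConfig) {
    this.config = config;
  }
}

// First cut: same arguments as the GrpcClient constructor, same return value.
// All it adds so far is a seam where wiring logic can go later.
class GrpcConnectionFactory {
  static getGrpcClient(config: GrpcClientConfig): GrpcClient {
    return new GrpcClient(config);
  }
}

// In ZBClient, `new GrpcClient(config)` becomes:
const client = GrpcConnectionFactory.getGrpcClient({
  host: "localhost:26500",
  packageName: "gateway_protocol",
  service: "Gateway",
});
console.log(client.config.host); // localhost:26500
```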

And just like that, we have created a new level of indirection - the fundamental theorem of software engineering:

“We can solve any problem by introducing an extra level of indirection.”

GrpcMiddleware

OK, so this needs to be a stateful class. Let’s create some profile types first, and some characteristic profiles.

I’ll use a string literal union type for the profile names, and key the characteristics map using them.

I don’t know what shape the characteristics rules will have yet, so I’ll type them as any for now, but through an alias, so that I can update the typing across the entire system once I know what it is:
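Here is a sketch of the profile names and the characteristics map. The profile names `CAMUNDA_CLOUD` and `VANILLA` and the `startupTime` field are placeholders I chose for illustration. The 5000 ms value echoes the five-second window described later in this post.

```typescript
// Profile names as a string literal union: a misspelt profile is a compile error.
type ConnectionProfile = "CAMUNDA_CLOUD" | "VANILLA";

// Placeholder alias until the rule shape is known; tightening this one line
// later updates the typing everywhere it is used.
type ConnectionCharacteristics = any;

// Record keyed by the union: the compiler requires an entry for every profile.
const characteristics: Record<ConnectionProfile, ConnectionCharacteristics> = {
  CAMUNDA_CLOUD: { startupTime: 5000 },
  VANILLA: { startupTime: 0 },
};

console.log(characteristics.CAMUNDA_CLOUD.startupTime); // 5000
```

Keying a `Record` by the union means that adding a profile name later forces you to add its characteristics too.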

OK, now the class. It will take in the entire config being passed to the GrpcClient constructor, and we can do an initial naive detection by looking for the address of Camunda Cloud in the host string:
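The naive detection can be sketched as a standalone function. It assumes that Camunda Cloud gateway addresses contain `zeebe.camunda.io`, and that assumption is the whole heuristic. `detectProfile` is a hypothetical name.

```typescript
type ConnectionProfile = "CAMUNDA_CLOUD" | "VANILLA";

// Only the field this sketch needs from the GrpcClient constructor config.
interface GrpcClientConfig {
  host: string;
}

// Naive detection, assuming Camunda Cloud gateway addresses contain zeebe.camunda.io.
function detectProfile(config: GrpcClientConfig): ConnectionProfile {
  return config.host.toLowerCase().includes("zeebe.camunda.io") ? "CAMUNDA_CLOUD" : "VANILLA";
}

console.log(detectProfile({ host: "abc-123.zeebe.camunda.io:443" })); // CAMUNDA_CLOUD
console.log(detectProfile({ host: "localhost:26500" })); // VANILLA
```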

Actually, I need to get the GrpcClient in here. I could move the detection up to the factory, and then pass in the profile and the GrpcClient at the same time - using a parameter object of course.

Here is what it looks like after that refactor:
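A minimal sketch of the factory after moving detection up, with the middleware taking a parameter object. `GrpcMiddlewareOptions`, `errorCount` and the stand-in `GrpcClient` are hypothetical. The point is the shape: detect, construct, wire, return.

```typescript
import { EventEmitter } from "events";

type ConnectionProfile = "CAMUNDA_CLOUD" | "VANILLA";

interface GrpcClientConfig {
  host: string;
}

// Stand-in for the real GrpcClient, which is an EventEmitter.
class GrpcClient extends EventEmitter {
  readonly config: GrpcClientConfig;
  constructor(config: GrpcClientConfig) {
    super();
    this.config = config;
  }
}

// Parameter object: named fields rather than positional arguments.
interface GrpcMiddlewareOptions {
  grpcClient: GrpcClient;
  profile: ConnectionProfile;
}

class GrpcMiddleware {
  readonly profile: ConnectionProfile;
  errorCount = 0;

  constructor({ grpcClient, profile }: GrpcMiddlewareOptions) {
    this.profile = profile;
    // This closure references `this`, so the middleware stays reachable
    // for as long as grpcClient does, even with no other reference to it.
    grpcClient.on("connectionError", () => {
      this.errorCount++;
    });
  }
}

class GrpcConnectionFactory {
  static getGrpcClient(config: GrpcClientConfig): GrpcClient {
    // Detection now happens here, before either object exists.
    const profile: ConnectionProfile = config.host.includes("zeebe.camunda.io")
      ? "CAMUNDA_CLOUD"
      : "VANILLA";
    const grpcClient = new GrpcClient(config);
    new GrpcMiddleware({ grpcClient, profile }); // deliberately unassigned
    return grpcClient;
  }
}
```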

The unassigned call to a new GrpcMiddleware looks a bit weird. We won’t have a reference to it, but it won’t get garbage collected while the GrpcClient is alive, because the event listeners it attaches to the GrpcClient hold a reference to it.

We can test the GrpcMiddleware by passing in a mock event emitter as the GrpcClient.
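A sketch of that kind of test. It assumes the middleware only ever subscribes to the client, so its dependency can be typed as a plain Node `EventEmitter`. The `errors` array is a hypothetical hook for making assertions.

```typescript
import { EventEmitter } from "events";

// The middleware only subscribes to events, so its dependency can be typed
// as a plain EventEmitter instead of the concrete GrpcClient.
class GrpcMiddleware {
  readonly errors: Error[] = [];

  constructor({ grpcClient }: { grpcClient: EventEmitter }) {
    grpcClient.on("connectionError", (err: Error) => {
      this.errors.push(err);
    });
  }
}

// A bare EventEmitter stands in for GrpcClient: no broker, no network.
const mockClient = new EventEmitter();
const middleware = new GrpcMiddleware({ grpcClient: mockClient });
mockClient.emit("connectionError", new Error("14 UNAVAILABLE"));
console.log(middleware.errors.length); // 1
```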

Logging

Next, I rip out the logging from GrpcClient, and put it into the GrpcMiddleware. Then I just look for log calls in GrpcClient and turn them into events for the middleware.

Oh, OK - to configure the logger with the right namespace, I need the config. OK, I guess I delegate creation of the client to the GrpcMiddleware after all.

Now the factory looks like this:

OK, I create a new ZBLogger in the middleware. Now it looks like this:
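A sketch of the delegated version, where the middleware creates both the logger and the client and the factory shrinks to one line. `Logger` is a hypothetical stand-in for ZBLogger, and its API here is invented for illustration. The `namespace` config field is likewise an assumption.

```typescript
import { EventEmitter } from "events";

// Hypothetical stand-in for ZBLogger; only the namespace matters here.
class Logger {
  readonly namespace: string;
  readonly lines: string[] = [];
  constructor(namespace: string) {
    this.namespace = namespace;
  }
  error(message: string): void {
    this.lines.push(`[${this.namespace}] ${message}`);
  }
}

interface GrpcClientConfig {
  host: string;
  namespace: string; // hypothetical: the logger namespace carried in the config
}

class GrpcClient extends EventEmitter {
  readonly host: string;
  constructor(config: GrpcClientConfig) {
    super();
    this.host = config.host;
  }
}

// The middleware needs the config for the logger namespace anyway,
// so it now creates both the logger and the client, and wires them up.
class GrpcMiddleware {
  readonly log: Logger;
  readonly grpcClient: GrpcClient;

  constructor(config: GrpcClientConfig) {
    this.log = new Logger(config.namespace);
    this.grpcClient = new GrpcClient(config);
    this.grpcClient.on("connectionError", (err: Error) => this.log.error(err.message));
  }
}

// The factory shrinks to a one-liner that returns the wired client.
class GrpcConnectionFactory {
  static getGrpcClient(config: GrpcClientConfig): GrpcClient {
    return new GrpcMiddleware(config).grpcClient;
  }
}
```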

Events

The GrpcClient already emits events. You can chain event listeners to instances of ZBClient and ZBWorker to react to connection (‘ready’) and disconnection (‘error’) events already. I can’t change the names of those, because they are a public API.

Update: man, emitting an ‘error’ event caused my program to inexplicably crash and had me debugging for 30 minutes. Eventually, I tracked it down, and consulted the README - the event is connectionError. Emitting ‘error’ with no listeners throws.
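This is standard Node `EventEmitter` behaviour, and it is easy to reproduce in isolation. The event name `'error'` is special-cased, while any other name with no listeners is a silent no-op.

```typescript
import { EventEmitter } from "events";

const emitter = new EventEmitter();

// No 'error' listener: emit() throws the Error it was given.
try {
  emitter.emit("error", new Error("channel went away"));
} catch (err) {
  console.log((err as Error).message); // channel went away
}

// Any other event name with no listeners is ignored; emit() returns false.
console.log(emitter.emit("connectionError", new Error("channel went away"))); // false

// With an 'error' listener attached, the error is delivered instead of thrown.
emitter.on("error", (err: Error) => console.log(`handled: ${err.message}`));
emitter.emit("error", new Error("channel went away")); // prints "handled: channel went away"
```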

I should namespace events for the Middleware so it is clear where they are going, rather than using plain strings.

I will have to redirect all events through the middleware, actually - otherwise custom listeners on clients connected to Camunda Cloud will not get the benefit of the middleware.

This poses a challenge, actually. I can trap the current events in the middleware, but since the ZBClient only has access to the GrpcClient, and not the middleware, I will need to re-emit them through the GrpcClient from the middleware. I’ve never tried that. Let me see if it can be done…

OK, my IDE is telling me I can call this.GrpcClient.emit('error'), so we will redirect all events through the middleware.

I will create namespaced events for the existing events as well as the new ones. This will break the existing event listeners for now, until I re-emit the events from the middleware later. So in the GrpcClient:

And here is an example of redirecting the existing log messages and events in GrpcClient to the new Middleware:
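A sketch of both steps together: a namespaced signal map, and a GrpcClient that hands messages and events to the middleware instead of logging them itself. The signal string values and the `handleChannelError` / `handleChannelReady` methods are hypothetical. A `const` object is used here as one way to namespace the names.

```typescript
import { EventEmitter } from "events";

// Hypothetical namespaced signal names. None is the literal 'error', so
// emitting one with no listener attached cannot throw.
const MiddlewareSignals = {
  Log: {
    Error: "MIDDLEWARE_LOG_ERROR",
    Info: "MIDDLEWARE_LOG_INFO",
  },
  Event: {
    Ready: "MIDDLEWARE_EVENT_READY",
    Error: "MIDDLEWARE_EVENT_ERROR",
  },
} as const;

class GrpcClient extends EventEmitter {
  // Before (roughly): log inline, then emit the public event directly.
  // After: hand both the message and the event to the middleware to decide.
  handleChannelError(err: Error): void {
    this.emit(MiddlewareSignals.Log.Error, `gRPC channel error: ${err.message}`);
    this.emit(MiddlewareSignals.Event.Error);
  }

  handleChannelReady(): void {
    this.emit(MiddlewareSignals.Log.Info, "gRPC channel connected");
    this.emit(MiddlewareSignals.Event.Ready);
  }
}
```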

Now I can modify my Middleware to construct an intercepted GrpcClient that behaves just like the existing one. This is a good checkpoint, because I can verify my hypotheses and work so far by running the standard unit tests. So I make my GrpcMiddleware component like this:
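A sketch of the pass-through checkpoint. The middleware logs what it is handed and re-emits each middleware event under its original public name on the same client, so existing `ready` / `connectionError` listeners behave as before. The signal values and the `Logger` interface are assumptions carried over for illustration.

```typescript
import { EventEmitter } from "events";

const MiddlewareSignals = {
  Log: { Error: "MIDDLEWARE_LOG_ERROR" },
  Event: { Ready: "MIDDLEWARE_EVENT_READY", Error: "MIDDLEWARE_EVENT_ERROR" },
} as const;

// Minimal logger shape; the real one would be the configured ZBLogger.
interface Logger {
  error(message: string): void;
}

// Pass-through middleware: log what the client hands over, and re-emit each
// middleware event under its public name on the same client.
class GrpcMiddleware {
  constructor(grpcClient: EventEmitter, log: Logger) {
    grpcClient.on(MiddlewareSignals.Log.Error, (message: string) => log.error(message));
    grpcClient.on(MiddlewareSignals.Event.Ready, () => grpcClient.emit("ready"));
    grpcClient.on(MiddlewareSignals.Event.Error, () => grpcClient.emit("connectionError"));
  }
}
```

Because the incoming and outgoing names differ, a re-emitted `ready` cannot trigger the middleware's own listener again.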

Event-driven: Spooky Action at a Distance

When React first came out, one of the patterns for making components communicate at a distance across the component hierarchy was to emit events. That pattern fell out of favour, because it led to unpredictable architectures that were hard to reason about.

Adding namespaced events helps us out here, but - apart from the hell I just created for myself changing filename casings on a case-insensitive file system - the GrpcClient just went into an infinite loop and overflowed the stack.

It turns out that it listens to the same signals internally that it uses to communicate publicly. As soon as I intercepted them in the middleware and rebroadcast them, it went into a loop.

I fixed that by changing the string values of the Middleware namespaced events. The underlying problem remains, though: those events are not only informing the world, but also driving parts of GrpcClient.
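A minimal reproduction of that failure mode, not the actual GrpcClient code. When the relayed event and the re-emitted event share a string value, each re-emit fires the relay again. Giving them distinct values breaks the cycle. `relay` is a hypothetical helper.

```typescript
import { EventEmitter } from "events";

// Hypothetical helper: re-broadcast every `from` event as `to` on the same emitter.
function relay(emitter: EventEmitter, from: string, to: string): void {
  emitter.on(from, (...args: unknown[]) => emitter.emit(to, ...args));
}

// Broken: the middleware signal and the public event share one string, so each
// re-emit re-triggers the relay listener and the recursion blows the call stack.
const looping = new EventEmitter();
relay(looping, "ready", "ready");
let overflowed = false;
try {
  looping.emit("ready");
} catch (err) {
  overflowed = err instanceof RangeError; // V8: "Maximum call stack size exceeded"
}
console.log(overflowed); // true

// Fixed: distinct string values, so each signal is relayed exactly once.
const fixed = new EventEmitter();
let readyCount = 0;
relay(fixed, "MIDDLEWARE_EVENT_READY", "ready");
fixed.on("ready", () => {
  readyCount++;
});
fixed.emit("MIDDLEWARE_EVENT_READY");
console.log(readyCount); // 1
```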

I’m not going to fix that now (just like I didn’t fix it then). It’s technical debt though. Changes to the “Middleware-facing” events will have unpredictable side-effects on the GrpcClient behaviour. I will have to fix those at some point.

On second thoughts, something something write the best code you can at the time that you write it.

Architecture diagram in hand, I carefully trace the logic, and create namespaced InternalSignal events for GrpcClient to use internally.
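A sketch of the separation this aims for. GrpcClient's own state changes listen only to internal signals, and outward notification goes on a separate channel, so re-broadcasting a public signal cannot flip internal state. The `InternalSignals` values and the `connected` flag are hypothetical.

```typescript
import { EventEmitter } from "events";

// Hypothetical: signals GrpcClient sends to itself to drive its own state.
const InternalSignals = {
  Ready: "INTERNAL_READY",
  Error: "INTERNAL_ERROR",
} as const;

// Hypothetical: signals addressed to the middleware, which re-emits public events.
const MiddlewareSignals = {
  Ready: "MIDDLEWARE_EVENT_READY",
  Error: "MIDDLEWARE_EVENT_ERROR",
} as const;

class GrpcClient extends EventEmitter {
  connected = false;

  constructor() {
    super();
    // Internal state changes listen only to internal signals...
    this.on(InternalSignals.Ready, () => {
      this.connected = true;
      // ...and announce the change outward on a separate channel.
      this.emit(MiddlewareSignals.Ready);
    });
    this.on(InternalSignals.Error, () => {
      this.connected = false;
      this.emit(MiddlewareSignals.Error);
    });
  }
}
```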

Hours later…

Don’t emit an event named ‘error’ from an event emitter with no listeners attached, otherwise your code will throw. Just so you know…

In the end, I realised that the GrpcClient and the ZBLogger are so tightly coupled that they need to come at the same time from the same place.

I put the state management into a component called StatefulLogInterceptor. The static ConnectionFactory class takes a gRPC configuration and a log configuration, and returns a GrpcClient and a ZBLogger wired together via a StatefulLogInterceptor.
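A sketch of that wiring, with the client and the logger created together in one place. The config shapes, the `getGrpcClient` method name, the `MIDDLEWARE_LOG_ERROR` signal and both stand-in classes are assumptions. The interceptor here is a bare pass-through, and the connection-state logic would live inside it.

```typescript
import { EventEmitter } from "events";

// Hypothetical config shapes; the real ones carry TLS, credentials, and so on.
interface GrpcConfig {
  host: string;
}
interface LogConfig {
  namespace: string;
}

// Minimal stand-ins for GrpcClient and ZBLogger.
class GrpcClient extends EventEmitter {
  readonly host: string;
  constructor(config: GrpcConfig) {
    super();
    this.host = config.host;
  }
}

class Logger {
  readonly namespace: string;
  readonly lines: string[] = [];
  constructor(config: LogConfig) {
    this.namespace = config.namespace;
  }
  error(message: string): void {
    this.lines.push(`[${this.namespace}] ${message}`);
  }
}

// Sits between the two; pass-through here, stateful in the real thing.
class StatefulLogInterceptor {
  constructor(grpcClient: GrpcClient, log: Logger) {
    grpcClient.on("MIDDLEWARE_LOG_ERROR", (message: string) => log.error(message));
  }
}

// Client and logger are created together and wired before they are returned.
class ConnectionFactory {
  static getGrpcClient(options: { grpcConfig: GrpcConfig; logConfig: LogConfig }): {
    grpcClient: GrpcClient;
    log: Logger;
  } {
    const grpcClient = new GrpcClient(options.grpcConfig);
    const log = new Logger(options.logConfig);
    new StatefulLogInterceptor(grpcClient, log);
    return { grpcClient, log };
  }
}
```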

Check it out:

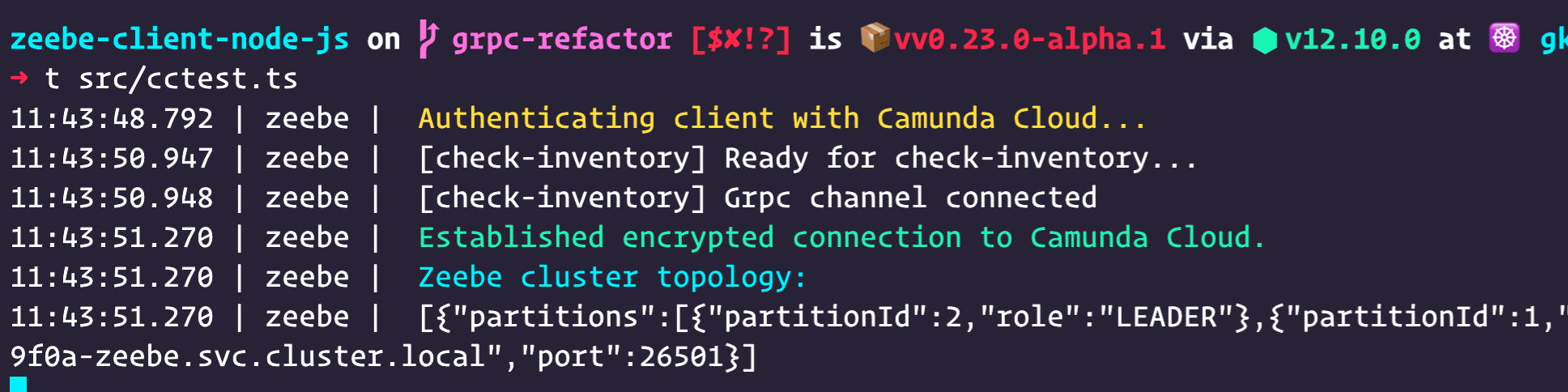

Before

After

Under the hood, the behaviour of the gRPC connection is exactly the same. However, now the library detects that it is connecting to Camunda Cloud, and changes its output while the connection is being established.

You can see from both that it takes about three seconds for the gRPC connection to settle. Our expectation with Camunda Cloud, however, is that error events will be emitted while this happens, and there is no benefit in exposing these to users. They are expected behaviour.

Once the connection is established, the behaviour from that point is the same as a self-hosted connection. If the Camunda Cloud connection doesn’t settle within five seconds, all the emitted errors are presented to the user (they are being buffered in the background).

The adaptive behaviour is completely disabled when the log level is set to DEBUG.
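A sketch of the buffering rule just described. It assumes the interceptor is told the detected profile and log level up front, and that whatever listens to the GrpcClient calls it directly. The method names `onConnectionError`, `onReady` and `startupTimedOut`, and the `log` callback standing in for ZBLogger, are hypothetical. The 5000 ms window is the five seconds from the text. `startupTimedOut` is public only so the expiry can be exercised without waiting.

```typescript
type LogLevel = "INFO" | "DEBUG";

interface InterceptorOptions {
  isCamundaCloud: boolean;
  logLevel: LogLevel;
  startupWindowMs: number; // 5000 for Camunda Cloud in this post
  log: (message: string) => void;
}

class StatefulLogInterceptor {
  private buffer: string[] = [];
  private settling: boolean;
  private timer?: ReturnType<typeof setTimeout>;
  private readonly log: (message: string) => void;

  constructor(options: InterceptorOptions) {
    this.log = options.log;
    // Adaptive behaviour applies only to Camunda Cloud, and never at DEBUG.
    this.settling = options.isCamundaCloud && options.logLevel !== "DEBUG";
    if (this.settling) {
      this.timer = setTimeout(() => this.startupTimedOut(), options.startupWindowMs);
    }
  }

  // Called for every connection error the GrpcClient reports.
  onConnectionError(message: string): void {
    if (this.settling) {
      this.buffer.push(message); // expected noise while the connection settles
    } else {
      this.log(message);
    }
  }

  // Called when the gRPC channel reports ready.
  onReady(): void {
    if (this.settling) {
      // Settled in time: the buffered errors were expected, so drop them.
      this.stopSettling();
      this.buffer = [];
    }
  }

  // The window expired without a ready signal: surface everything held back.
  startupTimedOut(): void {
    if (!this.settling) return;
    this.stopSettling();
    this.buffer.forEach(m => this.log(m));
    this.buffer = [];
  }

  private stopSettling(): void {
    this.settling = false;
    if (this.timer !== undefined) clearTimeout(this.timer);
    this.timer = undefined;
  }
}
```

After the window closes, whether by `ready` or by timeout, errors pass straight through, which matches "the same as a self-hosted connection" from that point on.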

Happy.

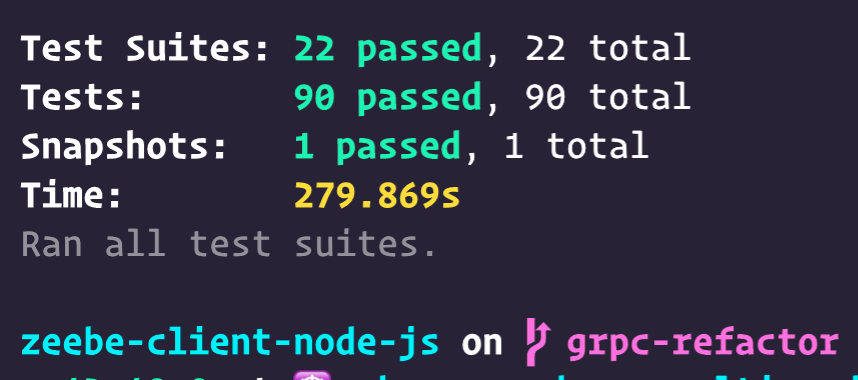

Next: get the tests to pass.

And…

Yassss!

(Although this is against the local broker. Not green on Camunda Cloud yet…)

About me: I’m a Developer Advocate at Camunda, working primarily on the Zeebe Workflow engine for Microservices Orchestration, and the maintainer of the Zeebe Node.js client. In my spare time, I build Magikcraft, a platform for programming with JavaScript in Minecraft.